A sneak peek inside Zyte Smart Proxy Manager: The world’s smartest web scraping proxy network

“How does Zyte Smart Proxy Manager work?” is the question we are asked most often, usually by customers who struggled with constant proxy issues for months (or years), only to see those issues disappear completely when they switched to Zyte Smart Proxy Manager.

Today we’re going to give you a behind-the-scenes look at Zyte Smart Proxy Manager so you can see for yourself why it is the world’s smartest web scraping proxy network, and the only solution that lets you completely forget about proxies and focus on the data instead.

What is Zyte Smart Proxy Manager?

First off, Zyte Smart Proxy Manager is not your average proxy solution. Instead of just providing users with a batch of IPs and perhaps some rudimentary proxy rotation, Zyte Smart Proxy Manager is a complete proxy management solution for web scraping at scale.

Simply send an HTTP request to Zyte Smart Proxy Manager's single-endpoint API, and it will return a successful response. There is no need to manage proxies yourself.

Zyte Smart Proxy Manager achieves this by managing thousands of proxies so that you don’t have to: carefully rotating, throttling, and blacklisting them, and selecting the best IP for each individual request to get optimal results with maximum efficiency.

This completely removes the hassle of managing IPs and lets you focus on the data, not the proxies.
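To make the single-endpoint idea concrete, here is a minimal Python sketch that routes a standard-library request through one proxy address. The endpoint `proxy.example.com:8011`, the `YOUR_API_KEY` placeholder, and the convention of sending the API key as the proxy username with an empty password are assumptions for illustration. Check Zyte Smart Proxy Manager's documentation for the real endpoint, authentication scheme, and HTTPS setup.

```python
import base64
import urllib.request

# Hypothetical placeholders: take the real endpoint and key from the documentation.
PROXY_ENDPOINT = "proxy.example.com:8011"
API_KEY = "YOUR_API_KEY"


def proxy_auth_header(api_key: str) -> str:
    """Basic auth value with the API key as username and an empty password (assumed scheme)."""
    token = base64.b64encode(f"{api_key}:".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_request(url: str, api_key: str = API_KEY,
                  endpoint: str = PROXY_ENDPOINT) -> urllib.request.Request:
    """Build a plain-HTTP request that is sent via the single proxy endpoint."""
    req = urllib.request.Request(url)
    req.set_proxy(endpoint, "http")  # every request goes to the same address
    req.add_header("Proxy-Authorization", proxy_auth_header(api_key))
    return req


def fetch(url: str) -> bytes:
    """One call per page; rotation, retries and ban handling happen on the proxy side."""
    with urllib.request.urlopen(build_request(url), timeout=60) as resp:
        return resp.read()
```

Notice that the crawler code contains no rotation or retry logic at all: a call such as `fetch("http://example.com/")` is the whole integration.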

From day one, Zyte Smart Proxy Manager was designed exclusively for web scrapers. It has been optimized to ensure users can reliably extract the data they need at scale, without having to worry about bans or failed requests.

How does Zyte Smart Proxy Manager work?

Zyte Smart Proxy Manager completely removes the hassle of managing proxy pools and all the headaches that go with it: rotating proxies, defining ban rules for each website, managing user agents, throttling, etc.

Without Zyte Smart Proxy Manager you would make a request to your own proxy pool and hope that it is successful.

Instead, make a request to the Zyte Smart Proxy Manager API and it takes care of the rest.

Once the HTTP request reaches the API, Zyte Smart Proxy Manager's intelligent IP management layer kicks in. This layer automatically determines the target domain and selects the optimal proxy pool and request configuration (the delay between requests, user agents, cookies, etc.) to give each request the best chance of success.
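The request flow described above starts by working out which site a request is aimed at. Here is a hypothetical sketch of that first step: extract the target domain and look up a per-site profile, falling back to a default. The `RequestProfile` fields, pool names, and values are invented for illustration. The real service's per-site settings are not published and are tuned automatically rather than hard-coded in a table.

```python
from dataclasses import dataclass
from typing import Dict
from urllib.parse import urlsplit


@dataclass(frozen=True)
class RequestProfile:
    """Hypothetical per-site settings; the real service's settings are not public."""
    pool: str             # which proxy pool to draw IPs from
    delay_seconds: float  # pause between requests to the same site
    use_cookies: bool


DEFAULT_PROFILE = RequestProfile(pool="datacenter", delay_seconds=1.0, use_cookies=True)

# Illustrative table: a managed service would learn these values, not hand-write them.
PROFILES: Dict[str, RequestProfile] = {
    "shop.example.com": RequestProfile(pool="residential", delay_seconds=3.0, use_cookies=True),
}


def target_domain(url: str) -> str:
    """Extract the lower-cased host name the request is aimed at."""
    return (urlsplit(url).hostname or "").lower()


def select_profile(url: str) -> RequestProfile:
    """Pick the configuration for the target domain, falling back to a default."""
    return PROFILES.get(target_domain(url), DEFAULT_PROFILE)
```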

Where Zyte Smart Proxy Manager's capabilities really shine, however, is when a request is blocked.

Instead of simply returning a failed response to the client, Zyte Smart Proxy Manager changes the proxies, request delays, user agents, etc. as required to return a successful response.
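This retry behaviour can be sketched as a loop that, on each blocked response, moves to another proxy and user agent and waits longer before trying again. It is a simplified, hypothetical client-side illustration: the `fetch` callable, the set of "blocked" status codes, and the doubling back-off are assumptions, not Zyte Smart Proxy Manager's internals.

```python
import time
from typing import Callable, Sequence, Tuple

# Hypothetical fetch signature: (url, proxy, user_agent) -> (status_code, body).
Fetch = Callable[[str, str, str], Tuple[int, str]]

# Illustrative block signals only; real ban detection looks at far more than status codes.
BLOCK_STATUSES = {403, 429, 503}


def fetch_with_retries(url: str, fetch: Fetch, proxies: Sequence[str],
                       user_agents: Sequence[str], max_attempts: int = 5,
                       base_delay: float = 1.0,
                       sleep: Callable[[float], None] = time.sleep) -> Tuple[int, str, int]:
    """Return (status, body, attempts_used); raise RuntimeError if every attempt is blocked."""
    delay = base_delay
    for attempt in range(max_attempts):
        # Cycle through both lists so consecutive attempts use a different proxy and
        # user agent whenever more than one is available.
        proxy = proxies[attempt % len(proxies)]
        user_agent = user_agents[attempt % len(user_agents)]
        status, body = fetch(url, proxy, user_agent)
        if status not in BLOCK_STATUSES:
            return status, body, attempt + 1
        if attempt + 1 < max_attempts:
            sleep(delay)  # back off before the next attempt
            delay *= 2    # and wait twice as long after each further block
    raise RuntimeError(f"{url} still blocked after {max_attempts} attempts")
```

Injecting `sleep` keeps the loop testable; in a real crawler the default `time.sleep` applies.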

Zyte Smart Proxy Manager handles all your proxy headaches

Instead of having to manually design, build and configure your own proxy management infrastructure, with Zyte Smart Proxy Manager all the individual elements that make a great proxy management solution tick are available out of the box and completely automated for your requests.

Throttling/delays

Zyte Smart Proxy Manager automatically finds the sweet spot between crawl speed and reliability, the two most important factors for any web scraping professional.

As web scrapers, we usually want to extract our target data as fast as possible. However, higher speed brings a higher risk of requests being blocked, so finding the crawl speed sweet spot is our #1 priority.

If you design your own proxy management solution, you have to embark on a tedious process of trial and error to find this elusive sweet spot. This is very time-consuming and often doesn’t yield optimal results.

In contrast, Zyte Smart Proxy Manager is optimized for successful requests and finds this sweet spot for you automatically. It continuously adjusts request delays to maximize the successful request throughput of your crawl, with the precise delay determined by the target website, the estimated load on that website, and its ban history. In this way, Zyte Smart Proxy Manager selects the throttling configuration that gives you maximum request reliability.
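One common way to find the crawl speed sweet spot automatically is to speed up in small additive steps after each success and back off multiplicatively after each ban, the same idea as AIMD in TCP congestion control. The sketch below applies that rule per domain. It is a technique swapped in for illustration, with made-up default parameters. Zyte Smart Proxy Manager's actual throttling algorithm is not described here.

```python
from collections import defaultdict


class AdaptiveThrottle:
    """Per-domain delay: speed up slowly on success, back off sharply on a ban.

    An AIMD-style heuristic chosen for illustration; the service's actual
    algorithm (which also weighs site load and ban history) is not published.
    """

    def __init__(self, initial: float = 1.0, minimum: float = 0.2,
                 maximum: float = 60.0, step: float = 0.1, backoff: float = 2.0):
        self.minimum, self.maximum = minimum, maximum
        self.step, self.backoff = step, backoff
        # domain -> seconds to wait between requests to that domain
        self._delay = defaultdict(lambda: initial)

    def delay_for(self, domain: str) -> float:
        return self._delay[domain]

    def record(self, domain: str, banned: bool) -> float:
        """Update and return the delay for `domain` after one response."""
        current = self._delay[domain]
        if banned:
            new = min(self.maximum, current * self.backoff)  # multiplicative back-off
        else:
            new = max(self.minimum, current - self.step)     # small additive speed-up
        self._delay[domain] = new
        return new
```

The asymmetry is deliberate: a ban is costly, so the delay jumps quickly, while speed is regained cautiously.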

Ban detection

Key to Zyte Smart Proxy Manager's functionality is its ban detection, built on a constantly updated database of more than 130 ban types, response codes, and captchas, developed as a result of Zyte Smart Proxy Manager serving over 8 billion requests per month.

Zyte Smart Proxy Manager monitors every request for bans, even those that are not immediately obvious from the response code. When a ban is detected, Zyte Smart Proxy Manager automatically retries the request with a different IP (while also modifying the delay) and puts the banned IP in what we call “Jail Time”: a state in which that proxy won’t be used to make requests to that same website for a variable period of time.
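The “Jail Time” idea can be sketched as a table of release times keyed by (proxy, website), so a proxy benched for one site remains usable for others. In this hypothetical sketch, the sentence length is drawn at random between `min_jail` and `max_jail` seconds, standing in for the “variable period of time” mentioned above. The real durations, and how they are chosen, are not public. The clock is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable, Dict, List, Optional, Tuple


class ProxyJail:
    """Keeps a proxy away from one website for a while after it was banned there.

    Hypothetical sketch: the jail length is a random value in [min_jail, max_jail]
    seconds, an assumption made for illustration.
    """

    def __init__(self, proxies: List[str], min_jail: float = 300.0,
                 max_jail: float = 1800.0,
                 clock: Callable[[], float] = time.monotonic,
                 rng: Optional[random.Random] = None):
        self.proxies = list(proxies)
        self.min_jail, self.max_jail = min_jail, max_jail
        self.clock = clock
        self.rng = rng or random.Random()
        # (proxy, domain) -> time at which the proxy may be used for that domain again
        self._released_at: Dict[Tuple[str, str], float] = {}

    def jail(self, proxy: str, domain: str) -> None:
        """Bench `proxy` for `domain` only; it stays usable for other sites."""
        sentence = self.rng.uniform(self.min_jail, self.max_jail)
        self._released_at[(proxy, domain)] = self.clock() + sentence

    def available(self, domain: str) -> List[str]:
        """Proxies that are not currently jailed for `domain`."""
        now = self.clock()
        return [p for p in self.proxies
                if self._released_at.get((p, domain), 0.0) <= now]
```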

You can also add your own custom ban rules to cope with specific project requirements and challenges.
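Ban detection with custom rules can be modelled as a list of signatures, each matching a status code, a body pattern, or both. The two default rules below are a tiny illustrative stand-in for the 130+ ban types mentioned earlier, and `BanRule` and `detect_ban` are hypothetical names. Body patterns are what let a detector catch bans that arrive with an innocent-looking 200 status code.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BanRule:
    """One hypothetical ban signature: a status code, a body pattern, or both."""
    name: str
    status: Optional[int] = None
    body_pattern: Optional[re.Pattern[str]] = None

    def matches(self, status: int, body: str) -> bool:
        if self.status is not None and status != self.status:
            return False
        if self.body_pattern is not None and not self.body_pattern.search(body):
            return False
        # A rule with no conditions at all should never match.
        return self.status is not None or self.body_pattern is not None


# Illustrative defaults only; the service's own database of ban types is not public.
DEFAULT_RULES = [
    BanRule("too-many-requests", status=429),
    BanRule("captcha-page", body_pattern=re.compile(r"captcha", re.IGNORECASE)),
]


def detect_ban(status: int, body: str,
               custom_rules: Optional[List[BanRule]] = None) -> Optional[str]:
    """Return the name of the first matching rule, or None if the response looks clean."""
    for rule in DEFAULT_RULES + (custom_rules or []):
        if rule.matches(status, body):
            return rule.name
    return None
```

A project-specific rule, such as treating an empty search listing as a soft ban, is then just one more `BanRule` passed in `custom_rules`.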

Cookie & session handling

Depending on your project requirements, your spiders may need to store and manage cookies so that they can navigate to the target data and parse it.

With Zyte Smart Proxy Manager, these cookie and session requirements will be handled for you, eliminating the need to handle them on the crawler side.

However, if your project requires custom cookie and session handling, you can easily disable this functionality and manage cookies and sessions in your crawler instead.
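If you disable the built-in cookie handling, your crawler takes over that job. The exact option for switching it off is in the technical documentation and is not reproduced here. As a minimal, hypothetical sketch, a crawler could keep a per-domain cookie store built on the standard library's `http.cookies.SimpleCookie`. It deliberately ignores expiry, `Path`, and `Domain` attributes. For production use, `http.cookiejar.CookieJar` applies those rules properly.

```python
from http.cookies import SimpleCookie
from typing import Dict


class DomainCookieStore:
    """Minimal per-domain cookie store for crawlers that manage sessions themselves.

    Simplified sketch: cookie expiry, Path and Domain attributes are ignored.
    """

    def __init__(self) -> None:
        self._cookies: Dict[str, Dict[str, str]] = {}

    def update(self, domain: str, set_cookie_header: str) -> None:
        """Remember the cookies from one Set-Cookie header received from `domain`."""
        parsed = SimpleCookie()
        parsed.load(set_cookie_header)
        jar = self._cookies.setdefault(domain, {})
        for name, morsel in parsed.items():
            jar[name] = morsel.value

    def header(self, domain: str) -> str:
        """Build the Cookie header to send on the next request to `domain`."""
        jar = self._cookies.get(domain, {})
        return "; ".join(f"{name}={value}" for name, value in jar.items())
```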

Browser-like headers

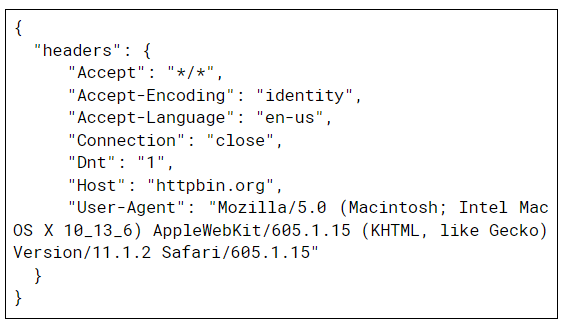

Zyte Smart Proxy Manager also uses browser-like headers to give your requests more human-like behavior.

When a request is received, Zyte Smart Proxy Manager selects the most appropriate headers from a browser header pool, removing the need for you to worry about header configuration and rotation.

In certain circumstances, you might prefer to control the headers sent with your requests yourself. For those cases, Zyte Smart Proxy Manager's header functionality can be disabled by passing a single option with your API request.
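Selecting headers from a browser header pool, with an opt-out, might look like the sketch below. The pool entries are placeholder profiles, and `passthrough` is a hypothetical flag standing in for the real option. Picking a whole profile at once, rather than mixing individual headers, keeps the `User-Agent` consistent with the headers sent alongside it.

```python
import random
from typing import Dict, List, Optional

# Placeholder profiles for illustration; a real pool would be larger and kept current.
BROWSER_HEADER_POOL: List[Dict[str, str]] = [
    {"User-Agent": "Mozilla/5.0 (example desktop browser)",
     "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
     "Accept-Language": "en-US,en;q=0.9"},
    {"User-Agent": "Mozilla/5.0 (example mobile browser)",
     "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
     "Accept-Language": "en-GB,en;q=0.8"},
]


def choose_headers(client_headers: Dict[str, str], passthrough: bool = False,
                   rng: Optional[random.Random] = None) -> Dict[str, str]:
    """Return the headers to send upstream.

    With passthrough=True (hypothetical opt-out) the client's headers are sent as-is.
    Otherwise a whole browser profile is picked from the pool, and client headers the
    profile does not set (e.g. Referer) are kept.
    """
    if passthrough:
        return dict(client_headers)
    rng = rng or random.Random()
    profile = dict(rng.choice(BROWSER_HEADER_POOL))
    for name, value in client_headers.items():
        profile.setdefault(name, value)  # never overrides a profile header
    return profile
```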

Headless browser support

With the increasing complexity of modern websites (most require 50 to 150 individual requests to load), scraping efficiently at scale can become a real challenge.

To help combat this problem, Zyte Smart Proxy Manager comes with headless browser support, so you can easily integrate headless browsers such as Splash and Puppeteer with your crawlers and significantly reduce the time it takes to get successful requests.

If you’d like to learn more about Zyte Smart Proxy Manager's full feature set then be sure to check out Zyte Smart Proxy Manager’s technical documentation.

Built for reliability at scale

Despite all this advanced functionality being available out of the box, where Zyte Smart Proxy Manager really shines is the reliability and predictability it gives you when scraping at scale.

When you start a web scraping project, you can often get away with a simple proxy pool. As you scale, however, you will have to develop all the functionality listed above or suffer from constant reliability issues.

In contrast, from day one Zyte Smart Proxy Manager has been built with scraping at scale in mind.

Zyte Smart Proxy Manager's #1 priority is to give you maximum request reliability. That is why it has been painstakingly designed to consistently return successful responses to your crawlers, no matter which website is being scraped.

And if your request volume increases, you don’t need to worry about getting more proxies or reconfiguring your proxy management logic. You simply increase your allocated number of concurrent requests and Zyte Smart Proxy Manager handles the rest, giving you great predictability of your web scraping costs as you scale.

This means developers and companies don’t need to invest huge amounts of time, engineering resources, and money into developing their own scalable proxy management solutions, and can focus on the data instead.
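On the client side, increasing your allocated number of concurrent requests usually comes down to changing one number in your crawler. Here is a minimal sketch, assuming an asyncio-based crawler and a hypothetical async `fetch` function: an `asyncio.Semaphore` caps the number of requests in flight, so scaling up means raising `concurrency` to match your allocation.

```python
import asyncio
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def crawl(urls: List[str], fetch: Callable[[str], Awaitable[T]],
                concurrency: int) -> List[T]:
    """Fetch all URLs while keeping at most `concurrency` requests in flight.

    Results come back in the same order as `urls`.
    """
    limit = asyncio.Semaphore(concurrency)

    async def bounded(url: str) -> T:
        async with limit:  # waits here while `concurrency` requests are running
            return await fetch(url)

    return await asyncio.gather(*(bounded(u) for u in urls))
```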

Try Zyte Smart Proxy Manager today!

If you’re tired of troubleshooting proxy issues and would like to give Zyte Smart Proxy Manager a try, sign up today (you can cancel within 14 days if it isn’t for you) or schedule a call with our crawl consultant team.

Zyte Smart Proxy Manager is great for web scraping teams (or individual developers) who are tired of managing their own proxy pools and are ready to integrate an off-the-shelf proxy API that only charges for successful requests into their web scraping stack.

It is also a perfect fit for larger organizations with mission-critical web crawling requirements that are looking for a dedicated crawling partner whose tools and team of crawl consultants can help them crawl more reliably at scale, build custom solutions for their specific requirements, debug any issues they run into when scraping the web, and offer enterprise SLAs.

At Zyte we always love to hear what our readers think of our content, and any questions you might have. So please leave a comment below with what you thought of the article and what you are working on.